“Outliers are the only thing standing between us and madness.”

I scribbled this quote in my notebook during a talk I attended last weekend. The event was a conference on “Living as humans in the machine age.”

I heard about the conference from my son, a college student at the University of Wisconsin-Madison. He attended the conference with me, and at lunchtime he swiped me into his cafeteria. As we sat eating our enchilada bake and fruit salad, our conversation meandered back through what we’d heard and settled on this quote.

The quote came from a speaker we agreed was one of our favorites at the conference, Cassandra Nelson, a research fellow at the University of Virginia. She chose the word madness as a pun, tying it to something scientists have recently discovered about artificial intelligence.

MAD stands for Model Autophagy Disorder. It describes a disconcerting phenomenon in artificial intelligence. If you’ve been keeping up with AI news, you’ve likely heard that most of the AI tools in today’s headlines, such as chatbots, run on what’s called a large language model. It works by chewing up unfathomable heaps of examples of human language. Then, when asked to perform a task, the model spits out the word it finds most likely, followed by the word most likely to follow that one, and so on until paragraphs form. It does not have intelligence so much as word-prediction skills.

But now imagine what happens if, instead of feeding the AI model more human language, you feed it its own outputs: language generated by AI. Repeat that regurgitating process just a few more times, and the model starts spitting out meaningless text. The AI has, in a sense, autophaged (eaten itself) into madness. It’s gone MAD.

There’s a simple reason MAD happens, as Dr. Nelson pointed out. Each time it processes language, the AI favors the most likely options, the ones clustered around the median, and ignores the outliers.

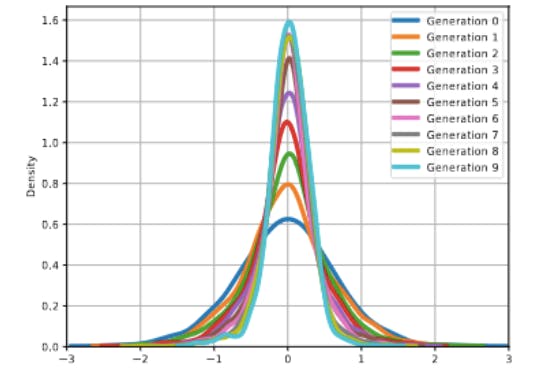

Graph of MAD process by Nicolas Papernot

Imagine a graph of the likelihood of every word I might choose to begin the next sentence. Some options would sit in the center, among the most likely: The… There… If… I… But there are many other words I could type. I could, for example, type Tails. Like this: Tails along the edges of the graph, the rarest possible options, are eliminated by AI. Without those tails, AI loses the beautiful variability that makes humans human, and our language human along with us.
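For readers curious to watch the tails disappear, here is a toy sketch in Python. It is not how the MAD researchers ran their experiments. The word list, the probabilities, the squaring step (similar to sampling at a low temperature, which favors likely words), and the 1% cutoff are all hypothetical choices made only to illustrate the idea of a model learning, round after round, from its own middle-of-the-road output.

```python
# Toy illustration only: the words, probabilities, sharpening exponent,
# and cutoff below are hypothetical, chosen to make the effect easy to see.

def next_generation(dist, sharpen=2.0, floor=0.01):
    """Simulate one round of a model learning from its own output.

    Raising each probability to a power > 1 and renormalizing (like
    sampling at a low temperature) boosts likely words and shrinks rare
    ones. Words that then fall below `floor` are dropped, standing in for
    words too rare to show up in the model's output at all.
    """
    boosted = {w: p ** sharpen for w, p in dist.items()}
    total = sum(boosted.values())
    boosted = {w: p / total for w, p in boosted.items()}
    # Drop the tails, then renormalize so the survivors sum to 1.
    survivors = {w: p for w, p in boosted.items() if p >= floor}
    total = sum(survivors.values())
    return {w: p / total for w, p in survivors.items()}


# Hypothetical probabilities for the first word of a sentence.
dist = {"The": 0.30, "There": 0.20, "If": 0.18,
        "I": 0.15, "But": 0.12, "Tails": 0.05}

for generation in range(1, 6):
    dist = next_generation(dist)
    print(generation, list(dist))
```

Run it and the vocabulary shrinks each round: Tails is gone by the second generation, and by the fourth only The is left.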

We need outliers for more than AI. Malcolm Gladwell famously argued as much in his book Outliers, but social scientists have been making that argument for a long time.

Over lunch, my son warmed my anthropologist-mother’s heart by recounting something he’d learned in his sociology class. He said Émile Durkheim also argued for the importance of outliers, or what Durkheim called deviance. Durkheim noticed that behaviors on the fringes of society matter because they pressure society to figure out what values it shares, even if only by deciding what to reject as deviant. Society holds itself together in part by chastising what it doesn’t like.

But there’s a more positive side of deviance, too. Society also needs deviance from norms because that’s how we discover new possibilities. It’s how cultures change. As a speaker later that afternoon put it, when the center is broken, you have to go to the margins, because that’s where all new cultural formations come from.

That speaker, Paul Kingsnorth, went on to say that solving the deepest social problems requires new cultural formations, and “this is multigenerational work.”

Mulling over with my son how to create a better society, I felt a deep gratitude for the chance to participate in this multigenerational work. When my kids were young, I used to lie awake at night worrying about how to raise them well. Now that they have moved on to college, I more often find myself awake at night worrying about the whole society their generation will soon lead. It helps to know that you all are in on this multigenerational work, too.

To do that work, we have to feed on the truth, and the whole truth. We have to take outliers seriously, writing them not only into our language models but into our lives. We can’t settle for letting the machines, or the folks closest to the median, tell us what the next word in our sentences should be. We have to continue the hard work of thinking for ourselves, which doesn’t have to mean rejecting AI altogether, but it does mean keeping up the practice of appreciating the whole wide spread of humanity.

Thanks for being a part of that important work.
